2. Preliminaries

In the Preliminaries chapter, we will introduce some base coding and data analysis.

2.1 Introduction to Python

This section covers essential Python skills, including setting up your environment, understanding basic syntax, and using packages to aid data analysis. This foundational knowledge is valuable for beginners and will support more advanced data analysis in later sections.

2.1.1 Setting up Python Environment

Option 1: Using Google Colab

Google Colab is a cloud-based platform for running Python code in what are called notebooks, which section code in to small chunks that can be run independently. Dividing a program into small sections is helpful for data science and machine learning. Follow these steps to get started. Using Google Colab allows you to run Python code in a flexible, collaborative environment without any local setup. It’s particularly useful for working with large datasets or sharing notebooks with others.

Step 1: Access Google Colab

- Open Google Colab: Go to Google Colab.

- Sign in with Google: Log in with your Google account to access and save notebooks in Google Drive.

Step 2: Create or Open a Notebook

- Create a New Notebook:

- Click on File > New notebook to open a blank notebook.

- Open an Existing Notebook:

- Choose File > Open notebook. You can load notebooks from Google Drive, GitHub, or your computer.

Step 3: Set Up and Run Code

- Using Code Cells:

- Colab organizes code into cells. To run a cell, click on it and press Shift + Enter or click the Play button.

- Installing Packages

Colab has many libraries installed by default. You can install additional packages if needed usingpipcommands within a cell.

# Install additional libraries

!pip install some_package

| Library | Purpose | Pip Install Command |

|---|---|---|

rdkit |

Cheminformatics tasks: SMILES, molecular fingerprints, 3D coordinates, etc. | pip install rdkit-pypi |

pandas |

Data manipulation and analysis. | pip install pandas |

numpy |

Numerical computing support. | pip install numpy |

matplotlib |

Data visualization (histograms, plots, etc.). | pip install matplotlib |

seaborn |

Advanced statistical plotting. | pip install seaborn |

scikit-learn |

Machine learning: regression, clustering, model evaluation. | pip install scikit-learn |

scipy |

Scientific computing: statistical tests (e.g., f_oneway), optimization, etc. |

pip install scipy |

plotly |

Interactive plots and dashboards. | pip install plotly |

Step 4: Save and Export Your Work

- Saving to Google Drive:

- Your Colab notebooks will automatically save to Google Drive. You can access them later under Colab Notebooks in Drive.

- Downloading Notebooks:

- To keep a copy on your computer, go to File > Download > Download .ipynb.

Step 5: Loading Files and Datasets in Colab

- Mount Google Drive:

- Run the following code to access your files on Google Drive. After running, authorize access to your Drive.

from google.colab import drive drive.mount('/content/drive') - Load Local Files:

- Use Colab’s file upload feature by clicking the File icon on the left sidebar, then selecting Upload Notebook.

Step 6: Adding and Executing Markdown Cells

- Adding Markdown for Documentation:

- To add notes, explanations, or instructions in text, you can insert a Markdown cell by clicking + Text in the toolbar.

Tips for Chemists Using Colab

- Managing Data Files: Store datasets in Google Drive to access them easily across multiple sessions.

- Running Long Calculations: Colab may disconnect if idle. To prevent data loss, make sure to save work frequently.

- Collaborative Editing: Share Colab notebooks with colleagues for real-time collaboration by clicking Share in the top-right corner.

Option 2: Installing Anaconda and Jupyter Notebook

To get started with Python, we’ll set up a development environment using Anaconda and Jupyter Notebook.

-

Anaconda: A package manager and environment manager commonly used for data science. It simplifies package installation and management.

-

Jupyter Notebook: An interactive environment ideal for data exploration and analysis. Jupyter Notebooks can be launched directly from Anaconda.

Here’s a detailed guide on installing Anaconda on different operating systems. Each step is tailored for Windows, macOS, and Linux to ensure a smooth setup.

Installing Anaconda on Windows, macOS, and Linux

Download Anaconda

- Go to the Anaconda Download Page:

- Visit the Anaconda download page.

- Select Your Operating System:

- Choose the appropriate installer for your OS: Windows, macOS, or Linux.

- Select the Python 3.x version (e.g., Python 3.9 or 3.10) for the latest stable release.

- Anaconda may detect your operating system and only give one option to download.

Windows Installation Instructions

- Run the Installer:

- Open the downloaded

.exefile. - Click Next on the Welcome screen.

- Open the downloaded

- Agree to the License Agreement:

- Read the agreement, then click I Agree.

- Select Installation Type:

- Choose Just Me unless multiple users need access.

- Choose Installation Location:

- Choose the default or specify a custom path.

- Avoid spaces or special characters in the path if choosing a custom location.

- Advanced Installation Options:

- Check Add Anaconda to my PATH environment variable (optional but not recommended due to potential conflicts).

- Ensure Register Anaconda as my default Python 3.x is selected, so Anaconda’s Python is used by default.

- Complete the Installation:

- Click Install and wait for the process to finish.

- Once complete, you can choose to open Anaconda Navigator or continue with manual setup.

- Verify the Installation:

- Open Anaconda Prompt from the Start Menu.

- Type

conda --versionto verify the installation. - Launch Jupyter Notebook by typing

jupyter notebook.

macOS Installation Instructions

- Run the Installer:

- Open the downloaded

.pkgfile. - Follow the prompts on the installer.

- Open the downloaded

- Agree to the License Agreement:

- Review and agree to the terms to proceed.

- Choose Installation Location:

- By default, Anaconda is installed in the

/Users/username/anaconda3directory.

- By default, Anaconda is installed in the

- Advanced Options:

- You may be asked if you want Anaconda’s Python to be your default Python.

- Choose Yes to add Anaconda to your PATH automatically.

- Complete the Installation:

- Wait for the installation to complete, then close the installer.

- Verify the Installation:

- Open Terminal.

- Type

conda --versionto verify that Anaconda is installed. - Launch Jupyter Notebook by typing

jupyter notebook.

Linux Installation Instructions

-

Open Terminal.

- Navigate to the Download Directory:

- Use

cdto navigate to where you downloaded the Anaconda installer.

cd ~/Downloads - Use

- Run the Installer:

- Run the installer script. Replace

Anaconda3-202X.X.X-Linux-x86_64.shwith your specific file name.

bash Anaconda3-202X.X.X-Linux-x86_64.sh - Run the installer script. Replace

- Review the License Agreement:

- Press Enter to scroll through the agreement.

- Type

yeswhen prompted to accept the agreement.

- Specify Installation Location:

- Press Enter to accept the default installation path (

/home/username/anaconda3), or specify a custom path.

- Press Enter to accept the default installation path (

- Add Anaconda to PATH:

- Type

yeswhen asked if the installer should initialize Anaconda3 by runningconda init.

- Type

- Complete the Installation:

- Once installation is finished, restart the terminal or use

source ~/.bashrcto activate the changes.

- Once installation is finished, restart the terminal or use

- Verify the Installation:

- Type

conda --versionto confirm that Anaconda is installed. - Launch Jupyter Notebook by typing

jupyter notebook.

- Type

Post-Installation: Launch Jupyter Notebook

- Open Anaconda Prompt (Windows) or Terminal (macOS/Linux).

- Start Jupyter Notebook:

- Type

jupyter notebookand press Enter. - Jupyter Notebook will open in your default web browser, allowing you to create and run Python code interactively.

- Type

2.1.2 Basic Syntax and Structure

Python’s simple syntax makes it a powerful and beginner-friendly language for data analysis. Here, we’ll cover core aspects:

Variables, Loops, and Functions

Variables: Used to store data. You can define a variable by simply assigning it a value.

# Defining variables

compound_name = "Aspirin"

molecular_weight = 180.16

Loops: Used to perform repetitive tasks.

# For loop example

for i in range(3):

print(f"Compound {i+1}")

Functions: Functions in Python allow you to reuse blocks of code and organize your script.

# Function to calculate the molecular weight ratio

def molecular_weight_ratio(compound_weight, standard_weight=100):

return compound_weight / standard_weight

print(molecular_weight_ratio(molecular_weight))

Data Types and Mathematical Operations

Data Types: How data such as a variable is represented to and stored in the computer.

- string type: Data meant to be interpreted literally

- integer type: Data meant to be stored as an integer

- float type: Data meant to be stored as a floating point number with decimal precision

Example Code

# Display the data type of variables

my_string = "10"

my_int = 10

my_float = 10.0

print(type(my_string))

print(type(my_int))

print(type(my_float))

Output

<class 'str'>

<class 'int'>

<class 'float'>

Mathematical Operations: The four regular mathematical operations can be used on integer and float type variables and order of operations is followed.

- Addition with the “+” character

- Substraction with the “-“ character

- Multiplication with the “*” character

- Division with the “/” character

Example Code

# Use the mathematical operators

my_int = 10

print(my_int * 3 / 2 + 1 - 3)

Output

13.0

Basic Printing Techniques in Python

Print commands are used in most programming languages to display the output of code that has been run. Printing is essential for checking code functionality, displaying calculations, and formatting data. Here are a few common ways to print in Python, along with examples that can help navigate real-world coding scenarios.

Simple Print Statements

Explanation: The print() function displays text or values to the screen. You can print variables or text strings directly.

Example Code

# Basic print

print("Welcome to Python programming!")

# Printing a variable

compound_name = "Aspirin"

print("Compound:", compound_name)

Output

Welcome to Python programming!

Compound: Aspirin

Using f-strings for Formatted Output

Explanation: Python’s formatted strings known as f-strings make it easy to display the value of a variable along with or embedded in other text, which simplifies displaying complex data clearly.

Example Code

compound_name = "Aspirin"

molecular_weight = 180.16

print(f"The molecular weight of {compound_name} is {molecular_weight}")

Output

The molecular weight of Aspirin is 180.16

Concatenating Strings and Variables

Explanation: Concatenating, or combining strings and variables is possible using the + operator, but the variable must first be converted to a string.

Example Code

print("The molecular weight of " + compound_name + " is " + str(molecular_weight))

Output

The molecular weight of Aspirin is 180.16

Formatting Numbers

Explanation: To control the display of floating-point numbers (e.g., limiting decimal places), use formatting options within f-strings.

Example Code

molecular_weight = 180.16

# Display molecular weight with two decimal places

print(f"Molecular weight: {molecular_weight:.2f}")

Output

Molecular weight: 180.16

Practice Problem

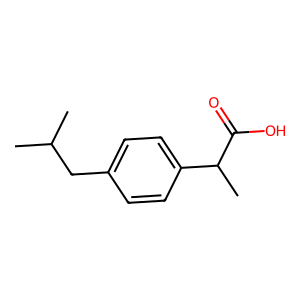

Write a program to define variables for the name and molecular weight of the active compound in Ibuprofen. Display the information using each print method above.

▶ Show Solution Code

compound_name = "Ibuprofen"

molecular_weight = 206.29

# Simple print

print("Compound:", compound_name)

# f-string

print(f"The molecular weight of {compound_name} is {molecular_weight}")

# Concatenation

print("The molecular weight of " + compound_name + " is " + str(molecular_weight))

# Formatting numbers

print(f"Molecular weight: {molecular_weight:.2f}")

2.1.3 Python Packages

Python packages are pre-built libraries that simplify data analysis. Here, we’ll focus on a few essential packages for our work.

Key Packages

- NumPy: A popular package for numerical computing, especially helpful for handling arrays and performing mathematical operations.

- Pandas: A popular library for data manipulation, ideal for handling tabular data structures like the contents of a spreadsheet.

- Matplotlib and Seaborn: Libraries for data visualization in plots and figures.

Example Code to Install and Import Packages

# Installing packages

!pip install numpy pandas matplotlib seaborn

# Importing packages

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

To load data from any file into your program, the program needs to know where to find the file. This can be accomplished in one of two ways. In the following example, we will load a text file.

-

Place the file inside the same folder as your program and open the file by its name:

# Loading data from a text file in the same location as your program with open('data.txt') as f: data = f.read() -

Find the full directory path to your file and provide this as the file name. In Windows, you can right click a file in File Explorer and click on “Copy as path” to copy the full directory path to your file. For example, if user coolchemist has the file ‘data.txt’ in their Desktop folder, the code to load this file might look like this:

# Loading data from a text file in the Desktop folder of user coolchemist with open('C:\Users\coolchemist\Desktop\data.txt') as f: data = f.read()

Practice Problem:

Problem: Write Python code to create a variable for a compound’s molecular weight and set it to 180.16. Then create a function that doubles the molecular weight.

▶ Show Solution Code

# Define a variable for molecular weight

molecular_weight = 180.16

# Function to double the molecular weight

def double_weight(weight):

return weight * 2

# Test the function

print(f"Double molecular weight: {double_weight(molecular_weight)}")

Output

Double molecular weight: 360.32

2.2 Data Analysis with Python

In this chapter, we’ll explore how to use Python for data analysis, focusing on importing and managing datasets commonly encountered in chemistry. Data analysis is a crucial skill for chemists, allowing you to extract meaningful insights from experimental data, predict outcomes, and make informed decisions in your research. Effective data analysis begins with properly importing and managing your datasets. This section will guide you through loading data from various file formats, including those specific to chemistry, and handling data from databases.

2.2.1 Loading Data from Various File Formats

Completed and Compiled Code: Click Here

Reading Data from CSV

Explanation:

CSV (Comma-Separated Values) and Excel files are common formats for storing tabular data. Python’s pandas library provides straightforward methods to read these files into DataFrames, which are powerful data structures for working with tabular data. A DataFrame is what is known in programming as an object. Objects contain data organized in specific defined structures and have properties that can be changed and used by the programmer.

Think of it as a variable that can store more complex information than a few words or a number. In this instance, we will store data tables as a DataFrame object. When the data table is read into a pandas DataFrame, the resulting object will have properties and functions built into it. For example, a substrate scope table can be read into a DataFrame and statistical analysis can be performed on the yield column with only a few lines of code.

Example Code:

import pandas as pd

# Reading a CSV file into a DataFrame called "csv_data"

csv_data = pd.read_csv('experimental_data.csv')

# Reading an Excel file into a DataFrame called "excel_data"

excel_data = pd.read_excel('compound_properties.xlsx', sheet_name='Sheet1', index_col=0)

Explanation of the Code:

pd.read_csv()reads data from a CSV file into a DataFrame.pd.read_excel()reads data from an Excel file. Thesheet_nameparameter specifies which sheet to read. Theindex_colparameter tells pandas which column to use as the row index.

Note: What is index_col?

When loading an Excel file with pandas.read_excel(), pandas automatically assigns row numbers like 0, 1, 2 as the index. But sometimes, the first column of your file already contains meaningful labels—like wavenumbers, timestamps, or compound IDs. In that case, you can tell pandas to use that column as the row index using index_col.

Without index_col

Suppose your Excel file starts with a column named “Wavenumber”, followed by data columns like “Time1” and “Time2”. If you don’t set index_col, pandas reads all columns as data. It then adds its own row numbers—0, 1, 2—on the side. The “Wavenumber” column will just be treated as another data column.

With index_col=0

By setting index_col=0, you’re telling pandas to treat the first column—“Wavenumber”—as the index. That means each row will now be labeled using the values in the “Wavenumber” column (e.g., 400, 500, 600). This is especially useful when the first column isn’t a feature but a meaningful label.

Reading CSVs via File Upload or Link

You can read CSV files in two ways:

Method 1: Upload the file manually (e.g., in Jupyter or Google Colab)

Download the BBBP.csv File: Click Here

from google.colab import files

uploaded = files.upload()

import pandas as pd

df = pd.read_csv('BBBP.csv')

# .head() gets the first 5 rows

print(df.head())

Method 2: Load the file directly from a GitHub raw link

This method allows your code to be instantly runnable without needing to manually upload files.

import pandas as pd

# Loading the dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

print(df.head())

Output

num name p_np smiles

0 1 Propanolol 1 [Cl].CC(C)NCC(O)COc1cccc2ccccc12

1 2 Terbutylchlorambucil 1 C(=O)(OC(C)(C)C)CCCc1ccc(cc1)N(CCCl)CCCl

2 3 40730 1 c12c3c(N4CCN(C)CC4)c(F)cc1c(c(C(O)=O)cn2C(C)CO...

3 4 24 1 C1CCN(CC1)Cc1cccc(c1)OCCCNC(=O)C

4 5 cloxacillin 1 Cc1onc(c2ccccc2Cl)c1C(=O)N[C@H]3[C@H]4SC(C)(C)...

Note: Method 2 works for CSVs but not for Excel files

CSV files are plain text files. When you use a raw.githubusercontent.com link, you’re simply accessing that text over the internet, and pandas.read_csv() can parse it just like it would read from a local file.

Excel files (.xlsx), however, are binary files, not plain text. GitHub doesn’t serve .xlsx files with the correct content type to let pd.read_excel() work directly from the URL. Instead, you need to manually download the file into memory using the requests library and wrap it with BytesIO, like this:

import pandas as pd

import requests

from io import BytesIO

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/master/_pages/LCRaman_data.xlsx'

response = requests.get(url)

excel_data = BytesIO(response.content)

df = pd.read_excel(excel_data, sheet_name='Sheet1', index_col=0)

print(df.head())

Output

time 4.5 4.533333 ... 7.433333 7.466667

40mM amino acids

wavenumber/cm-1 NaN NaN NaN ... NaN NaN

325.65 NaN 0.003956 0.003982 ... 0.012759 0.014740

327.651677 NaN 0.005396 0.004230 ... 0.012213 0.014480

329.652707 NaN 0.004024 0.005562 ... 0.012120 0.012711

331.65309 NaN 0.003313 0.005206 ... 0.009913 0.011362

[5 rows x 91 columns]

2.2.2 Data Cleaning and Preprocessing

Completed and Compiled Code: Click Here

Handling Missing Values and Duplicates

Because datasets often combine data from multiple sources or are taken from large databases, they need to be processed before being analyzed to prevent using incomplete or incorrect data. The processing is called cleaning and can be done with the help of built in functions rather than through manual fixing.

Explanation:

Data cleaning can be done with built in functions of the DataFrame object. This example uses fillna() to fill missing values with specified values and drop_duplicates() to remove duplicate rows from the DataFrame.

Example Code:

import pandas as pd

# Create a sample dataset with some missing 'name' values and duplicate 'smiles'

data = {

'name': ['Aspirin', None, 'Ibuprofen', 'Aspirin', None],

'smiles': ['CC(=O)OC1=CC=CC=C1C(=O)O', 'CC(=O)OC1=CC=CC=C1C(=O)O', 'CC(C)CC1=CC=C(C=C1)C(C)C(=O)O', '', '']

}

df = pd.DataFrame(data)

# Number of rows before removing duplicates

initial_row_count = len(df)

# Count how many rows have missing values in the 'name' column using isna() (checks for NaN) and sum() (counts total)

missing_name_count = df['name'].isna().sum()

# Handle missing values

df_filled = df.fillna({'name': 'Unknown', 'smiles': ''})

# Remove duplicate rows based on the 'smiles' column; 'subset' specifies which column(s) to consider for identifying duplicates

df_no_duplicates = df_filled.drop_duplicates(subset=['smiles'])

# Final row count after removing duplicates

final_row_count = len(df_no_duplicates)

# Print results

print(f"Number of rows before removing duplicates: {initial_row_count}")

print(f"Number of rows with missing names (filled as 'Unknown'): {missing_name_count}")

print(f"Number of rows after removing duplicates: {final_row_count}")

Output

Number of rows before removing duplicates: 5

Number of rows with missing names (filled as 'Unknown'): 2

Number of rows after removing duplicates: 3

Practice Problem:

We will clean the dataset by filling missing name values with 'Unknown', removing rows without smiles values, and removing any duplicate entries based on smiles.

Given a DataFrame with missing values:

- Fill missing values in the

namecolumn with'Unknown'and in thesmilescolumn with an empty string. - Remove any duplicate rows based on the

smilescolumn.

▶ Click to show considerations

In this practice problem, we removed data rows that did not contain `smiles` info, but what if we wanted to attempt to fill in the data based on the name? There are ways to do this through other python packages, such as pubchempy.Data Type Conversions

Explanation: Converting data types to the desired type for a given data category enables proper representation of the data for performing mathematical calculations or comparisons. This is necessary when data is imported with incorrect types (e.g., numbers stored as strings).

Example Code:

import pandas as pd

# Example DataFrame with mixed types

data = {'product': [1, 2, 3],

'milligrams': ['10.31', '8.04', '3.19'],

'yield': ['75', '46', '32']}

df = pd.DataFrame(data)

print("the data types before conversion are:")

print(str(df.dtypes) + "\n")

# Converting 'product' to string

df['product'] = df['product'].astype(str)

# Converting 'milligrams' to float

df['milligrams'] = df['milligrams'].astype(float)

# Converting 'yield' to integer

df['yield'] = df['yield'].astype(int)

print("the data types after conversion are:")

print(str(df.dtypes))

Output

the data types before conversion are:

product int64

milligrams object

yield object

dtype: object

the data types after conversion are:

product object

milligrams float64

yield int64

dtype: object

Note: In pandas, dtype: object means the column can hold any Python object, most commonly strings.

Practice Problem:

In the BBBP dataset, the num column (compound number) should be treated as an integer, and the p_np column (permeability label) should be converted to categorical data.

- Convert the num column to integer and the p_np column to a categorical type.

- Verify that the conversions are successful by printing the data types.

▶ Show Solution Code

import pandas as pd

# Loading the dataset again

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Convert 'num' to integer and 'p_np' to categorical

df['num'] = df['num'].astype(int)

df['p_np'] = df['p_np'].astype('category')

# Print the data types of the columns

print(df.dtypes)

Output

num int64

name object

p_np category

smiles object

dtype: object

Normalizing and Scaling Data

Because different features may span very different ranges, it’s often useful to bring them onto a common scale before modeling.

Explanation: Normalization adjusts the values of numerical columns to a common scale without distorting differences in ranges. This is often used in machine learning algorithms to improve model performance by making data more comparable.

Note: In cheminformatics, the term normalization can also refer to structural standardization (e.g., canonicalizing SMILES to avoid duplicates), as discussed in Walters et al., Nature Chemistry (2021). However, in machine learning, normalization usually refers to mathematical feature scaling (e.g., Min–Max or z-score scaling).

Note: Since different features may span very different ranges, it’s often useful to bring them onto a common scale before modeling. Machine learning models expect

- Rows = individual samples or observations (e.g., a single spectrum)

- Columns = features (e.g., intensity at different wavenumbers)

If your data is organized the other way around (e.g., rows as wavenumbers), use

.Tto transpose the DataFrame:df = df.T.

Note: Not every chemical column should be re-scaled.

Physical constants such as boiling-point (°C/K), pH, melting-point, ΔHf, etc. already live on a meaningful, absolute scale; forcing them into 0-1 space can hide or even distort mechanistic trends.

By contrast, measurement-derived signals (e.g. FTIR, Raman or UV-Vis intensities) and vectorised descriptors produced by software (atom counts, fragment fingerprints, Mordred/PaDEL descriptors, etc.) are scalable. Re-scaling these routinely improves machine-learning performance and does not alter the underlying chemistry.

Example Code:

import pandas as pd

from sklearn.preprocessing import MinMaxScaler

# Toy IR-intensity dataset (three peaks per spectrum)

spectra = pd.DataFrame({

"sample": ["spec-1", "spec-2", "spec-3"],

"I_1700cm-1": [ 920, 415, 1280],

"I_1600cm-1": [ 640, 1220, 870],

"I_1500cm-1": [ 305, 510, 460],

})

scaler = MinMaxScaler()

intensity_cols = ["I_1700cm-1", "I_1600cm-1", "I_1500cm-1"]

spectra[intensity_cols] = scaler.fit_transform(spectra[intensity_cols])

print(spectra)

Output: | | sample | I_1700cm-1 | I_1600cm-1 | I_1500cm-1 | |——–|———|————|————|————| | 0 | spec-1 | 0.583815 | 0.000000 | 0.000000 | | 1 | spec-2 | 0.000000 | 1.000000 | 1.000000 | | 2 | spec-3 | 1.000000 | 0.396552 | 0.756098 |

Practice Problem:

We will normalize Raman spectral intensity data using Min–Max scaling, which adjusts values to a common scale between 0 and 1.

- Load LCRaman_data.xlsx from the GitHub repository. This file contains real Raman spectral measurements.

- Transpose the DataFrame to make rows =

spectraand columns =wavenumber intensities. - Apply Min–Max scaling to the intensity values.

- Print the first few rows to verify the normalized values.

▶ Show Solution Code

import pandas as pd

from sklearn.preprocessing import MinMaxScaler

import requests

from io import BytesIO

# Load Raman spectral data from GitHub

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/master/_pages/LCRaman_data.xlsx'

response = requests.get(url)

excel_data = BytesIO(response.content)

df = pd.read_excel(excel_data, sheet_name="Sheet1", index_col=0)

# Transpose to make rows = spectra, columns = features

spectra = df.T

# Remove any rows (now columns) that aren't numeric (force numeric, NaN if not)

spectra = spectra.apply(pd.to_numeric, errors='coerce')

# Drop columns that are all NaNs (e.g. invalid features)

spectra = spectra.dropna(axis=1, how='all')

# Ensure all column names are strings to avoid errors in scikit-learn, which requires uniform string-type feature names

new_columns = []

for col in spectra.columns:

new_columns.append(str(col))

spectra.columns = new_columns

# Apply Min–Max scaling to all intensity columns

scaler = MinMaxScaler()

spectra_scaled = pd.DataFrame(

scaler.fit_transform(spectra),

index=spectra.index,

columns=spectra.columns

)

print(spectra_scaled.head())

Output

325.65 327.65167664 ... 2033.94965376 2035.29038256

time NaN NaN ... NaN NaN

4.5 0.751313 0.778351 ... 0.821384 0.763345

4.533333 0.751916 0.749897 ... 0.783924 0.634459

4.566667 0.810788 0.814801 ... 0.789397 0.666986

4.6 0.758148 0.819177 ... 0.756293 0.574035

Explanation of the Code: The script starts by loading Raman spectral data from an Excel file hosted on GitHub. It reads the first sheet into a pandas DataFrame, using the first column as the index. At this point, rows represent Raman shifts (wavenumbers), and columns represent sample measurements at various time points or conditions.

To prepare the data for machine learning, the DataFrame is transposed so that each row represents a spectrum (sample), and each column corresponds to a wavenumber feature. This is the standard format expected by most machine learning models: rows as observations, columns as features.

The script then converts all values to numeric using pd.to_numeric(). This step handles any non-numeric entries (e.g., text or metadata), converting them to NaN, which ensures that only valid numerical data is kept for further processing.

Next, columns that contain only NaN values are dropped. These typically result from unmeasured or corrupted wavenumbers and offer no useful information, so removing them helps clean the dataset and reduce dimensionality.

Before scaling, the script ensures all column names are strings. This is important because scikit-learn requires feature names to be of a consistent type. If a mix of float and string column names is present (common when wavenumbers are numeric), it raises a TypeError. Converting all names to strings ensures compatibility.

Finally, Min–Max scaling is applied to normalize all feature values between 0 and 1. This ensures that each wavenumber contributes equally to analyses, preventing features with large values from dominating. The first few rows of the scaled data are printed to verify the transformation.

2.2.3 Data Manipulation with Pandas

Completed and Compiled Code: Click Here

Filtering and Selecting Data

Explanation:

Filtering allows you to select specific rows or columns from a DataFrame that meet a certain condition. This is useful for narrowing down data to relevant sections.

Example Code:

import pandas as pd

# Example DataFrame

data = {'Compound': ['A', 'B', 'C'],

'MolecularWeight': [180.16, 250.23, 320.45]}

df = pd.DataFrame(data)

# Filtering rows where MolecularWeight is greater than 200

filtered_df = df[df['MolecularWeight'] > 200]

print(filtered_df)

Output

Compound MolecularWeight

1 B 250.23

2 C 320.45

Practice Problem 1:

- Filter a DataFrame from the BBBP dataset to show only rows where the

num(compound number) is greater than 500. - Select a subset of columns from the dataset and display only the

nameandsmilescolumns.

▶ Show Solution Code

import pandas as pd

# Loading the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Filtering rows where the 'num' column is greater than 500

filtered_df = df[df['num'] > 500]

# Selecting a subset of columns: 'name' and 'smiles'

subset_df = df[['name', 'smiles']]

print(filtered_df.head())

print() # New line

print(subset_df.head())

Output

num name p_np smiles

499 501 buramate 1 OCCOC(=O)NCc1ccccc1

500 502 butabarbital 1 CCC(C)C1(CC)C(=O)NC(=O)NC1=O

501 503 caffeine 1 Cn1cnc2N(C)C(=O)N(C)C(=O)c12

502 504 cannabidiol 1 CCCCCc1cc(O)c(C2C=C(C)CC[C@H]2C(C)=C)c(O)c1

503 505 capuride 1 CCC(C)C(CC)C(=O)NC(N)=O

name smiles

0 Propanolol [Cl].CC(C)NCC(O)COc1cccc2ccccc12

1 Terbutylchlorambucil C(=O)(OC(C)(C)C)CCCc1ccc(cc1)N(CCCl)CCCl

2 40730 c12c3c(N4CCN(C)CC4)c(F)cc1c(c(C(O)=O)cn2C(C)CO...

3 24 C1CCN(CC1)Cc1cccc(c1)OCCCNC(=O)C

4 cloxacillin Cc1onc(c2ccccc2Cl)c1C(=O)N[C@H]3[C@H]4SC(C)(C)...

Merging and Joining Datasets

Explanation:

Merging allows for combining data from multiple DataFrames based on a common column or index. This is especially useful for enriching datasets with additional information.

Example Code:

import pandas as pd

# Example DataFrames

df1 = pd.DataFrame({'Compound': ['A', 'B'],

'MolecularWeight': [180.16, 250.23]})

df2 = pd.DataFrame({'Compound': ['A', 'B'],

'MeltingPoint': [120, 150]})

# Merging DataFrames on the 'Compound' column

merged_df = pd.merge(df1, df2, on='Compound')

print(merged_df)

Output

Compound MolecularWeight MeltingPoint

0 A 180.16 120

1 B 250.23 150

Practice Problem 2:

- Merge two DataFrames from the BBBP dataset: One containing the

nameandsmilescolumns and another containing thenumandp_npcolumns. - Perform a left join on the

namecolumn and display the result.

▶ Show Solution Code

import pandas as pd

# Loading the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Create two DataFrames

df1 = df[['name', 'smiles']]

df2 = df[['name', 'num', 'p_np']]

# Perform a left join on the 'name' column

merged_df = pd.merge(df1, df2, on='name', how='left')

# Print the merged DataFrame

print(merged_df.head())

Output

name smiles num p_np

0 Propanolol [Cl].CC(C)NCC(O)COc1cccc2ccccc12 1 1

1 Terbutylchlorambucil C(=O)(OC(C)(C)C)CCCc1ccc(cc1)N(CCCl)CCCl 2 1

2 40730 c12c3c(N4CCN(C)CC4)c(F)cc1c(c(C(O)=O)cn2C(C)CO... 3 1

3 24 C1CCN(CC1)Cc1cccc(c1)OCCCNC(=O)C 4 1

4 24 C1CCN(CC1)Cc1cccc(c1)OCCCNC(=O)C 667 1

Grouping and Aggregation

Explanation:

Grouping organizes data based on specific columns, and aggregation provides summary statistics like the sum, mean, or count. This is useful for analyzing data at a higher level.

Example Code:

import pandas as pd

# Example DataFrame

data = {'Compound': ['A', 'A', 'B', 'B'],

'Measurement': [1, 2, 3, 4]}

df = pd.DataFrame(data)

# Grouping by 'Compound' and calculating the sum

grouped_df = df.groupby('Compound').sum()

print(grouped_df)

Output

Measurement

Compound

A 3

B 7

Practice Problem:

- Group the BBBP dataset by

p_npand compute the averagecarbon countfor each group (permeable and non-permeable compounds). - Use multiple aggregation functions (e.g., count and mean) on the

carbon countcolumn.

▶ Show Solution Code

import pandas as pd

# Load dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Add estimated carbon count from SMILES

df['carbon_count'] = df['smiles'].apply(lambda s: s.count('C'))

# Group by 'p_np' and calculate average carbon count

grouped_df = df.groupby('p_np')['carbon_count'].mean()

# Apply multiple aggregation functions

aggregated_df = df.groupby('p_np')['carbon_count'].agg(['count', 'mean'])

print(grouped_df)

print()

print(aggregated_df)

Output

p_np

0 14.283644

1 14.998724

Name: carbon_count, dtype: float64

count mean

p_np

0 483 14.283644

1 1567 14.998724

Pivot Tables and Reshaping Data

Explanation:

Pivot tables help reorganize data to make it easier to analyze by converting rows into columns or vice versa. This is useful for summarizing large datasets into more meaningful information.

Example Code:

import pandas as pd

# Example DataFrame

data = {'Compound': ['A', 'B', 'A', 'B'],

'Property': ['MeltingPoint', 'MeltingPoint', 'BoilingPoint', 'BoilingPoint'],

'Value': [120, 150, 300, 350]}

df = pd.DataFrame(data)

# Creating a pivot table

pivot_df = df.pivot_table(values='Value', index='Compound', columns='Property')

print(pivot_df)

Output

Property BoilingPoint MeltingPoint

Compound

A 300.0 120.0

B 350.0 150.0

Practice Problem 3:

- Create a pivot table from the BBBP dataset to summarize the average

carbon countfor eachp_npgroup (permeable and non-permeable). - Use the

melt()function to reshape the DataFrame, converting columns back into rows.

▶ Show Solution Code

import pandas as pd

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Add carbon count derived from SMILES

df['carbon_count'] = df['smiles'].apply(lambda s: s.count('C'))

# Creating a pivot table for 'carbon_count' grouped by 'p_np'

pivot_df = df.pivot_table(values='carbon_count', index='p_np', aggfunc='mean')

# Reshaping the DataFrame using melt

melted_df = df.melt(id_vars=['name'], value_vars=['carbon_count', 'p_np'])

print(pivot_df)

print(melted_df.head())

Output

carbon_count

p_np

0 14.283644

1 14.998724

name variable value

0 Propanolol carbon_count 7

1 Terbutylchlorambucil carbon_count 14

2 40730 carbon_count 9

3 24 carbon_count 11

4 cloxacillin carbon_count 11

2.2.4 Working with NumPy Arrays

Completed and Compiled Code: Click Here

Basic Operations and Mathematical Functions

Explanation:

NumPy is a library for numerical computing in Python, allowing for efficient array operations, including mathematical functions like summing or averaging.

Example Code:

import numpy as np

# Example array

arr = np.array([1, 2, 3, 4, 5])

# Basic operations

arr_sum = np.sum(arr)

arr_mean = np.mean(arr)

print(f"Sum: {arr_sum}, Mean: {arr_mean}")

Output

Sum: 15, Mean: 3.0

Practice Problem 1:

- Create a NumPy array from the

numcolumn in the BBBP dataset. - Perform basic statistical operations like

sum,mean, andmedianon thenumarray.

▶ Show Solution Code

import pandas as pd

import numpy as np

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Create a NumPy array from the 'num' column

num_array = np.array(df['num'])

# Perform basic statistical operations

num_sum = np.sum(num_array)

num_mean = np.mean(num_array)

num_median = np.median(num_array)

print(f"Sum: {num_sum}, Mean: {num_mean}, Median: {num_median}")

Output

Sum: 2106121, Mean: 1027.3760975609757, Median: 1026.5

Indexing and Slicing

Explanation:

NumPy arrays can be sliced to access subsets of data.

Example Code:

import numpy as np

# Example array

arr = np.array([10, 20, 30, 40, 50])

# Slicing the array

slice_arr = arr[1:4]

print(slice_arr)

Output

[20 30 40]

Practice Problem 2:

- Create a NumPy array from the

numcolumn in the BBBP dataset. - Slice the array to extract every second element.

- Reverse the array using slicing.

▶ Show Solution Code

import pandas as pd

import numpy as np

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Add carbon count derived from SMILES

df['carbon_count'] = df['smiles'].apply(lambda s: s.count('C'))

# Create a NumPy array from the 'carbon_count' column

carbon_array = np.array(df['carbon_count'])

# Slice the array to extract every second element

sliced_array = carbon_array[::2]

# Reverse the array's contents using slicing

reversed_array = carbon_array[::-1]

print(f"Carbon Array (from carbon_count): {carbon_array}")

print(f"Sliced Array (every second element): {sliced_array}")

print(f"Reversed Array: {reversed_array}")

Output

Carbon Array (from carbon_count): [ 7 14 9 ... 18 22 11]

Sliced Array (every second element): [ 7 9 11 ... 0 15 22]

Reversed Array: [11 22 18 ... 9 14 7]

Reshaping and Broadcasting

Explanation:

Reshaping changes the shape, or dimensions, of an array, and broadcasting applies operations across arrays of different shapes.

Example Code:

import numpy as np

# Example array

arr = np.array([[1, 2, 3], [4, 5, 6]])

# Reshaping the array

reshaped_arr = arr.reshape(3, 2)

# Broadcasting: adding a scalar to the array

broadcast_arr = arr + 10

print(reshaped_arr)

print()

print(broadcast_arr)

Output

[[1 2]

[3 4]

[5 6]]

[[11 12 13]

[14 15 16]]

Practice Problem 3:

- Reshape a NumPy array created from the

numcolumn of the BBBP dataset to a shape of(5, 20)(or similar based on the array length). - Use broadcasting to add 100 to all elements in the reshaped array.

▶ Show Solution Code

import pandas as pd

import numpy as np

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Create a NumPy array from the 'num' column

num_array = np.array(df['num'])

# Reshaping the array to a (5, 20) shape (or adjust based on dataset length)

reshaped_array = num_array[:100].reshape(5, 20)

# Broadcasting: adding 100 to all elements in the reshaped array

broadcasted_array = reshaped_array + 100

print("Reshaped Array:")

print(reshaped_array)

print("\nBroadcasted Array (after adding 100):")

print(broadcasted_array)

Output

Reshaped Array:

[[ 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18

19 20]

[ 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38

39 40]

[ 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58

59 60]

[ 61 62 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79

80 81]

[ 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99

100 101]]

Broadcasted Array (after adding 100):

[[101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118

119 120]

[121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138

139 140]

[141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158

159 160]

[161 162 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179

180 181]

[182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199

200 201]]

2.2.5 Introduction to Visualization Libraries

Completed and Compiled Code: Click Here

Data visualization is critical for interpreting data and uncovering insights. In this section, we’ll use Python’s visualization libraries to create various plots and charts.

Explanation: Python has several powerful libraries for data visualization, including Matplotlib and Seaborn.

- Matplotlib: A foundational library for static, animated, and interactive visualizations.

- Seaborn: Built on top of Matplotlib, Seaborn simplifies creating informative and attractive statistical graphics.

Example Code:

import matplotlib.pyplot as plt

import seaborn as sns

import plotly.express as px

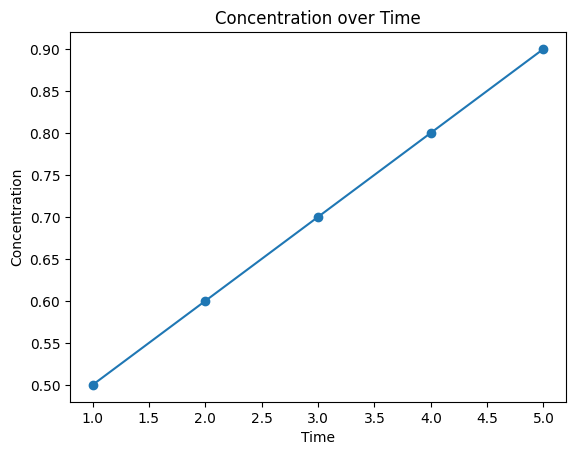

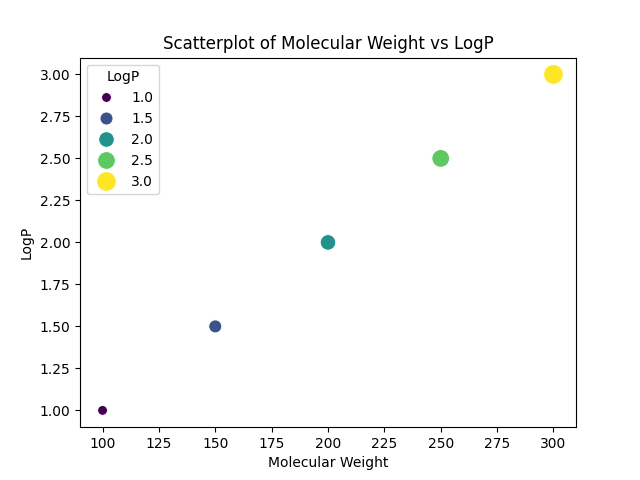

Line and Scatter Plots

Explanation: Line and scatter plots are used to display relationships between variables. Line plots are commonly used for trend analysis, while scatter plots are useful for examining the correlation between two numerical variables.

Example Code for Line Plot:

import matplotlib.pyplot as plt

# Example data

time = [10, 20, 30, 40, 50]

concentration = [0.5, 0.6, 0.7, 0.8, 0.9]

# Line plot

plt.plot(time, concentration, marker='o')

plt.xlabel('Time (s)')

plt.ylabel('Concentration (M)')

plt.title('Concentration vs Time')

plt.show()

Output

Figure: Line Plot of Time vs. Concentration

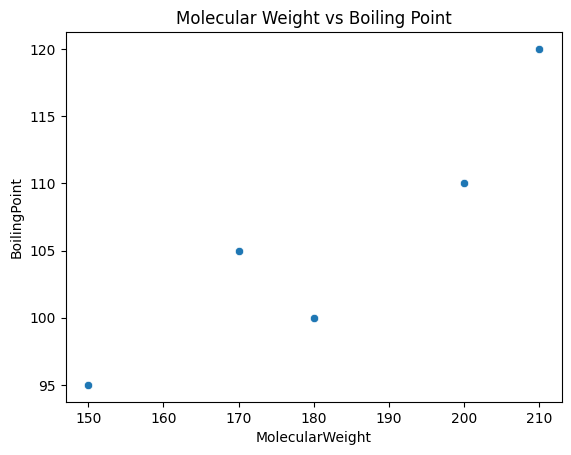

Example Code for Scatter Plot:

import seaborn as sns

import pandas as pd

# Load sample dataset

df = pd.DataFrame({'MolecularWeight': [180, 200, 150, 170, 210],

'BoilingPoint': [100, 110, 95, 105, 120]})

# Scatter plot

sns.scatterplot(data=df, x='MolecularWeight', y='BoilingPoint')

plt.title('Molecular Weight vs Boiling Point')

plt.show()

Output

Figure: Scatter Plot of Molecular Weight vs. Boiling Point

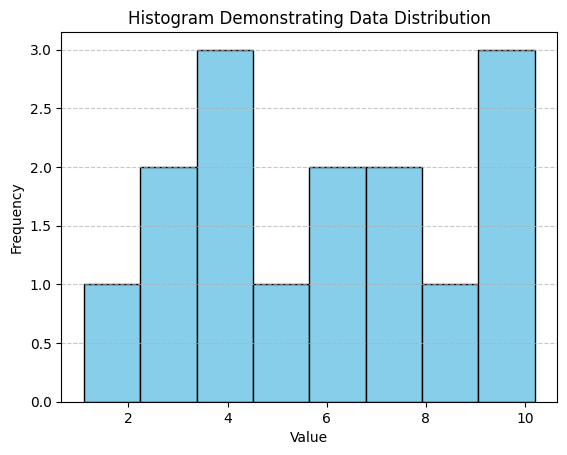

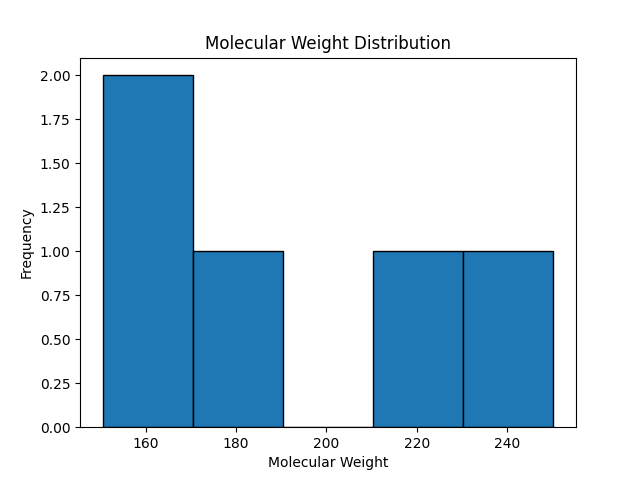

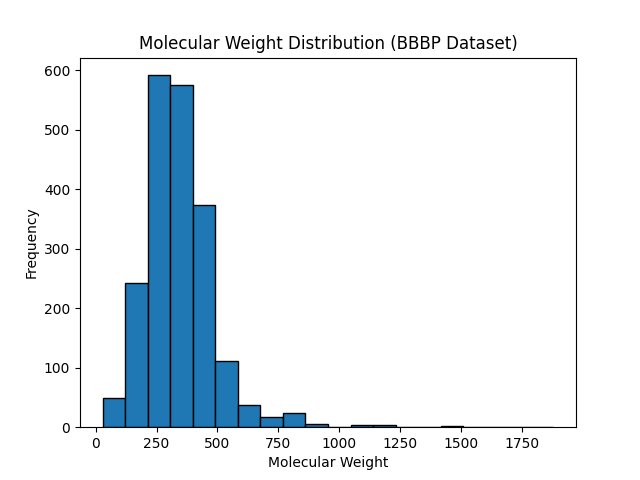

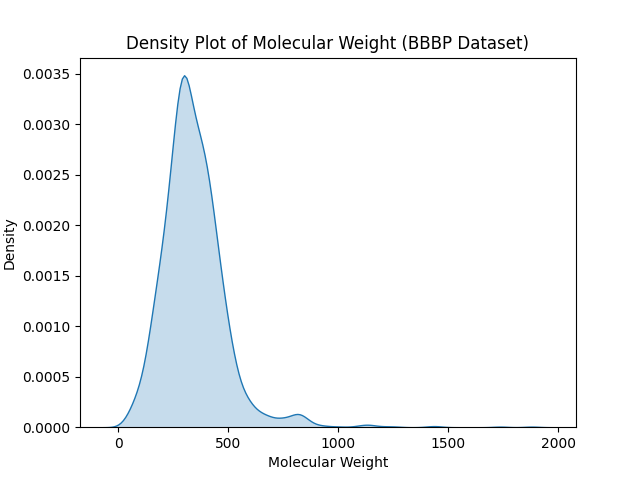

Histograms and Density Plots

Explanation:

Histograms display the distribution of a single variable by dividing it into bins, while density plots are smoothed versions of histograms that show the probability density.

Example Code for Histogram:

import matplotlib.pyplot as plt

# Example data

data = [1.1, 2.3, 2.9, 3.5, 4.0, 4.4, 5.1, 5.9, 6.3, 6.8, 7.2, 8.0, 9.1, 9.7, 10.2]

# Create histogram

plt.hist(data, bins=8, edgecolor='black', color='skyblue')

plt.xlabel('Value')

plt.ylabel('Frequency')

plt.title('Histogram Demonstrating Data Distribution')

plt.grid(axis='y', linestyle='--', alpha=0.7) # Add grid for better readability

plt.show()

Output

Figure: Histogram Demonstrating Data Distribution

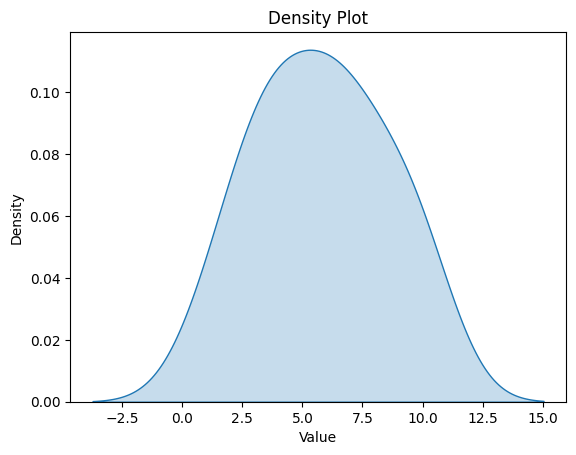

Example Code for Density Plot:

import seaborn as sns

# Example data

data = [1.1, 2.3, 2.9, 3.5, 4.0, 4.4, 5.1, 5.9, 6.3, 6.8, 7.2, 8.0, 9.1, 9.7, 10.2]

# Density plot

sns.kdeplot(data, fill=True)

plt.xlabel('Value')

plt.ylabel('Density')

plt.title('Density Plot')

plt.show()

Output

Figure: Density Plot Visualizing Data Distribution

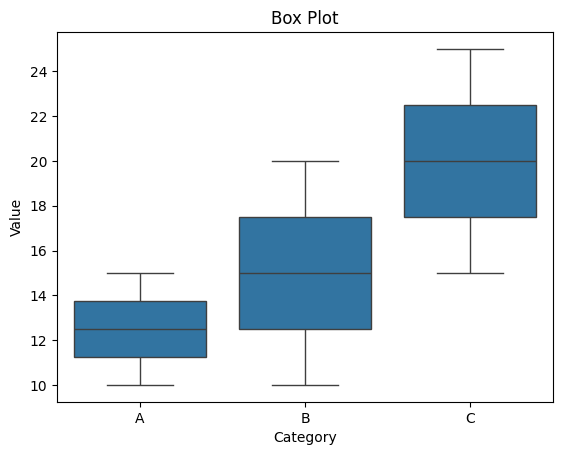

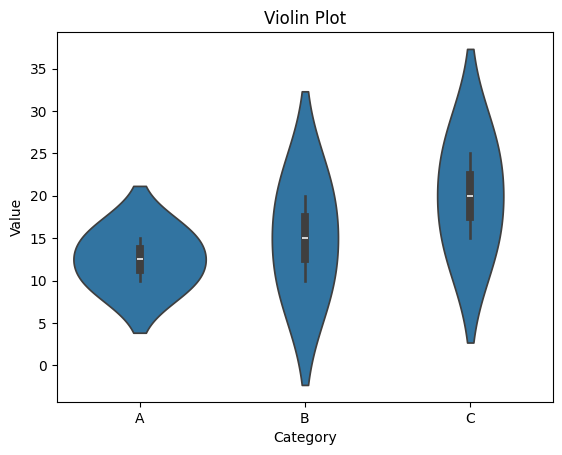

Box Plots and Violin Plots

Explanation: Box plots show the distribution of data based on quartiles and are useful for spotting outliers. Violin plots combine box plots and density plots to provide more detail on the distribution’s shape.

Example Code for Box Plot:

import seaborn as sns

import pandas as pd

# Sample data

df = pd.DataFrame({'Category': ['A', 'A', 'B', 'B', 'C', 'C'],

'Value': [10, 15, 10, 20, 15, 25]})

# Box plot

sns.boxplot(data=df, x='Category', y='Value')

plt.title('Box Plot')

plt.show()

Output

Figure: Box Plot Showing Value Distribution Across Categories

Example Code for Violin Plot:

import seaborn as sns

import pandas as pd

# Sample data

df = pd.DataFrame({'Category': ['A', 'A', 'B', 'B', 'C', 'C'],

'Value': [10, 15, 10, 20, 15, 25]})

# Violin plot

sns.violinplot(data=df, x='Category', y='Value')

plt.title('Violin Plot')

plt.show()

Output

Figure: Violin Plot Highlighting Value Distribution and Density Across Categories

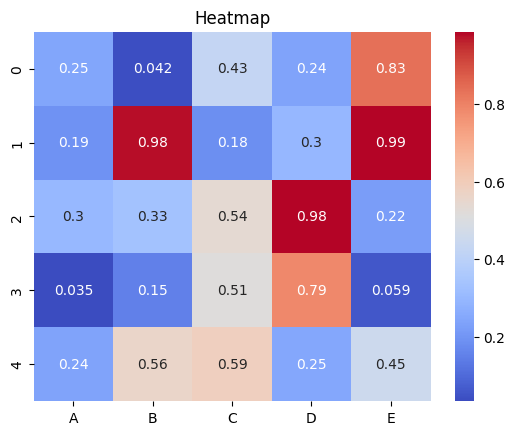

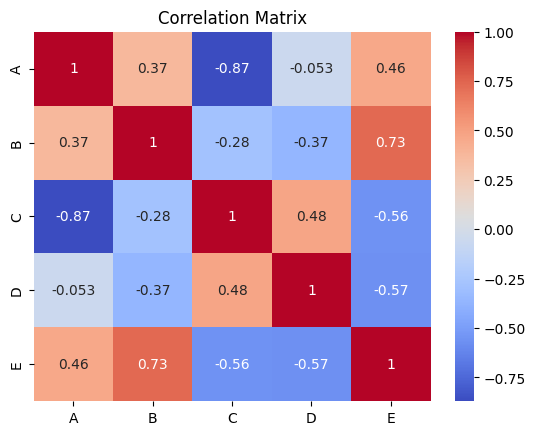

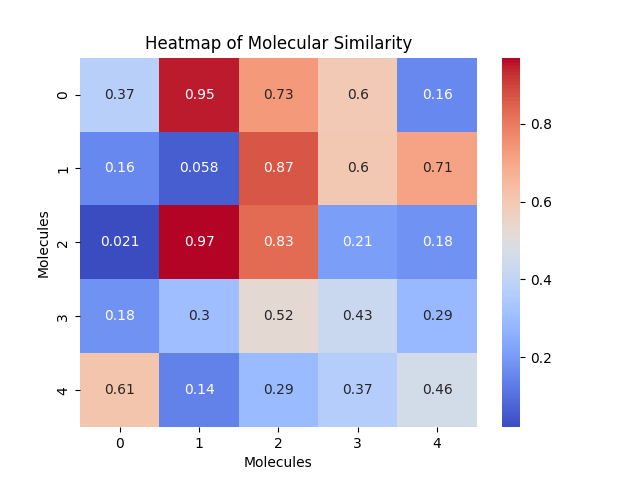

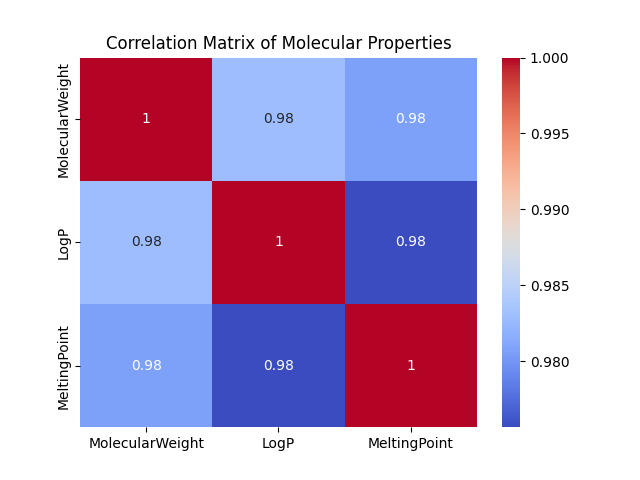

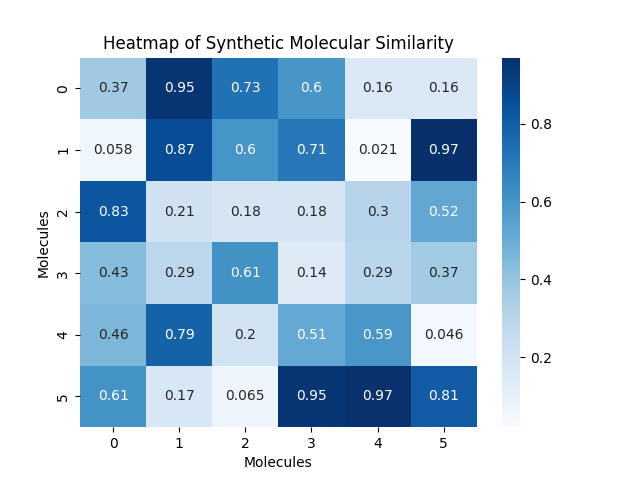

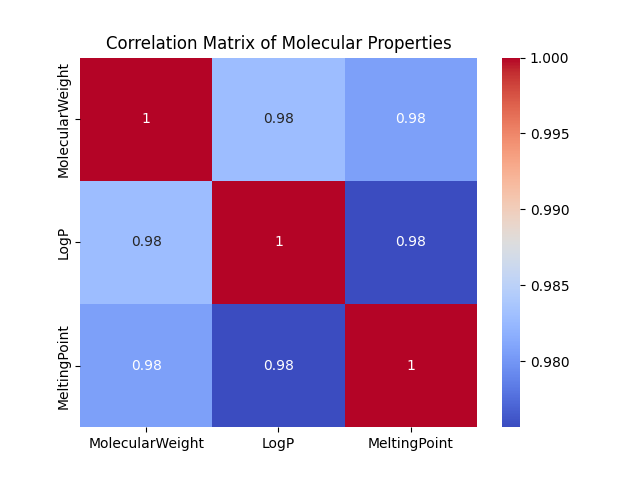

Heatmaps and Correlation Matrices

Explanation: Heatmaps display data as a color-coded matrix. They are often used to show correlations between variables or visualize patterns within data.

Example Code for Heatmap:

import seaborn as sns

import numpy as np

import pandas as pd

# Sample correlation data

data = np.random.rand(5, 5)

df = pd.DataFrame(data, columns=['A', 'B', 'C', 'D', 'E'])

# Heatmap

sns.heatmap(df, annot=True, cmap='coolwarm')

plt.title('Heatmap')

plt.show()

Figure: Heatmap Depicting Data as a Color-Coded Matrix

Example Code for Correlation Matrix:

import seaborn as sns

import numpy as np

import pandas as pd

# Sample correlation data

data = np.random.rand(5, 5)

df = pd.DataFrame(data, columns=['A', 'B', 'C', 'D', 'E'])

# Correlation matrix of a DataFrame

corr_matrix = df.corr()

# Heatmap of the correlation matrix

sns.heatmap(corr_matrix, annot=True, cmap='coolwarm')

plt.title('Correlation Matrix')

plt.show()

Figure: Heatmap Visualizing the Correlation Matrix Across Variables

2.2.6 Statistical Analysis Basics

Completed and Compiled Code: Click Here

Statistical analysis is essential for interpreting data and making informed conclusions. In this section, we’ll explore fundamental statistical techniques using Python, which are particularly useful in scientific research.

Descriptive Statistics

Explanation: Descriptive statistics summarize and describe the main features of a dataset. Common descriptive statistics include the mean, median, mode, variance, and standard deviation.

Example Code:

import pandas as pd

# Load a sample dataset

df = pd.DataFrame({'MolecularWeight': [180, 200, 150, 170, 210],

'BoilingPoint': [100, 110, 95, 105, 120]})

# Calculate descriptive statistics

mean_mw = df['MolecularWeight'].mean()

median_bp = df['BoilingPoint'].median()

std_mw = df['MolecularWeight'].std()

print(f"Mean Molecular Weight: {mean_mw}")

print(f"Median Boiling Point: {median_bp}")

print(f"Standard Deviation of Molecular Weight: {std_mw}")

Output

Mean Molecular Weight: 182.0

Median Boiling Point: 105.0

Standard Deviation of Molecular Weight: 23.874672772626646

Practice Problem 1:

Calculate the mean, median, and variance for the num column in the BBBP dataset.

▶ Show Solution Code

import pandas as pd

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Calculate the mean, median, and variance

mean_num = df['num'].mean()

median_num = df['num'].median()

variance_num = df['num'].var()

print(f'Mean: {mean_num}, Median: {median_num}, Variance: {variance_num}')

Output

Mean: 1027.3760975609757, Median: 1026.5, Variance: 351455.5290526015

Probability Distributions

Explanation:

Probability distributions are a fundamental concept in data analysis, representing how values in a dataset are distributed across different ranges. One of the most well-known and frequently encountered probability distributions is the normal distribution (also known as the Gaussian distribution).

The normal distribution is characterized by:

- A bell-shaped curve that is symmetric around its mean.

- The majority of values clustering near the mean, with fewer values occurring as you move further away.

- Its shape is determined by two parameters:

- Mean (μ): The central value where the curve is centered.

- Standard deviation (σ): A measure of the spread of data around the mean. A smaller σ results in a steeper, narrower curve, while a larger σ produces a wider, flatter curve.

In cheminformatics, normal distributions can describe various molecular properties, such as bond lengths, molecular weights, or reaction times, especially when the data arises from natural phenomena or measurements.

Why Normal Distributions Matter for Chemists:

- Predicting Properties: A normal distribution can be used to predict probabilities, such as the likelihood of a molecular property (e.g., boiling point) falling within a certain range.

- Outlier Detection: Chemists can identify unusual molecular behaviors or experimental measurements that deviate significantly from the expected distribution.

- Statistical Modeling: Many statistical tests and machine learning algorithms assume that the data follows a normal distribution.

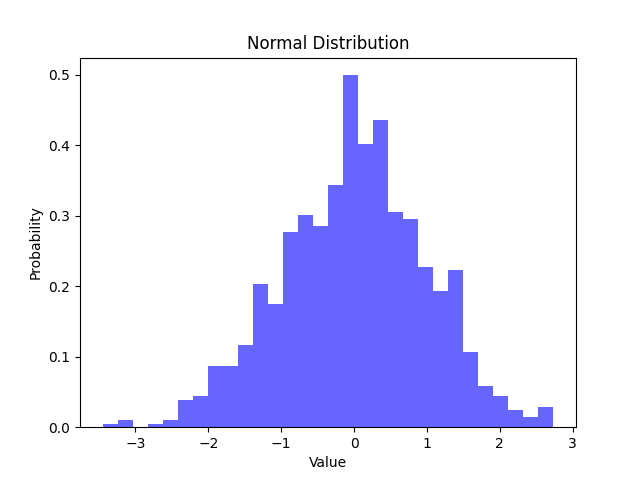

Example Code for Normal Distribution:

This code generates data following a normal distribution and visualizes it with a histogram:

import numpy as np

import matplotlib.pyplot as plt

# Generate data with a normal distribution

data = np.random.normal(loc=0, scale=1, size=1000)

# Plot the histogram

plt.hist(data, bins=30, density=True, alpha=0.6, color='b')

plt.xlabel('Value')

plt.ylabel('Probability')

plt.title('Normal Distribution')

plt.show()

Output

Note: This code can result in a different output every time it is run, since np.random.normal generates random data

What the Code Does:

- Generate Data: The

np.random.normalfunction creates 1000 random data points with:- Mean (

loc): Set to 0. - Standard Deviation (

scale): Set to 1.

- Mean (

- Plot Histogram: The

plt.histfunction divides the data into 30 equal-width bins and plots a histogram:density=Trueensures the histogram represents a probability density function (area under the curve sums to 1).alpha=0.6adjusts the transparency of the bars.color='b'specifies the bar color as blue.

- Labels and Title: The axes are labeled for clarity, and the title describes the chart.

Interpretation:

- The plot shows the majority of values concentrated around 0 (the mean), with the frequency tapering off symmetrically on either side.

- The shape of the curve reflects the standard deviation. Most values (approximately 68%) fall within one standard deviation (μ±σ) of the mean.

Figure: Histogram Depicting a Normal Distribution with Mean 0 and Standard Deviation 1

Applications in Chemistry:

- Molecular Property Analysis: Understand the variation in molecular weights or boiling points for a compound set.

- Error Analysis: Model and visualize experimental errors, assuming they follow a normal distribution.

- Kinetic Studies: Analyze reaction times or rates for processes that exhibit natural variability.

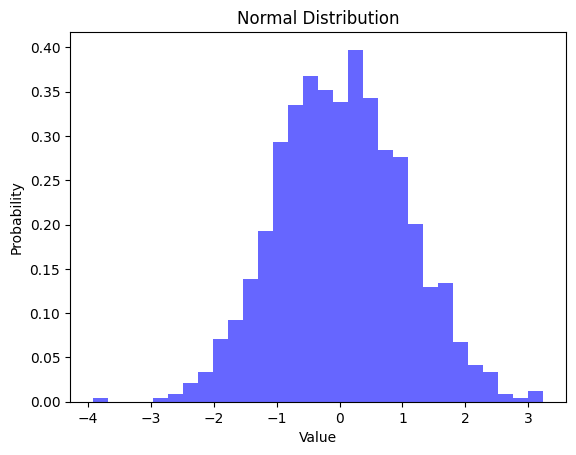

Practice Problem 2:

Generate a normally distributed dataset based on the mean and standard deviation of the num column in the BBBP dataset. Plot a histogram of the generated data.

▶ Show Solution Code

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Generate normally distributed data based on 'num' column

mean_num = df['num'].mean()

std_num = df['num'].std()

normal_data = np.random.normal(mean_num, std_num, size=1000)

# Plot histogram

plt.hist(normal_data, bins=30, density=True, alpha=0.6, color='g')

plt.xlabel('Value')

plt.ylabel('Density')

plt.title('Normal Distribution of num')

plt.show()

Output

Hypothesis Testing

Explanation:

Hypothesis testing is a statistical method used to evaluate whether there is enough evidence in a sample to infer a condition about a population. It is widely used in experimental chemistry to compare groups and draw conclusions about the effects of different treatments or conditions.

Key Concepts:

- Null and Alternative Hypotheses:

- Null Hypothesis (H₀): Assumes no difference between the groups being tested (e.g., the means of two groups are equal).

- Alternative Hypothesis (Hₐ): Assumes there is a difference between the groups (e.g., the means of two groups are not equal).

- T-Test:

- A t-test compares the means of two independent groups to determine if the observed differences are statistically significant.

- It calculates a t-statistic and a p-value to assess the evidence against the null hypothesis.

- Interpreting Results:

- T-Statistic: Measures the difference between group means relative to the variability of the data. Larger values suggest greater differences.

- P-Value: Represents the probability of observing the data assuming H₀ is true. A small p-value (commonly < 0.05) indicates significant differences between groups.

- Why It’s Useful for Chemists:

- Compare reaction yields under different conditions (e.g., catalysts or solvents).

- Evaluate the effectiveness of a new material or treatment compared to a control group.

Example Code for t-test:

from scipy.stats import ttest_ind

import pandas as pd

# Example data

group_a = [1.2, 2.3, 1.8, 2.5, 1.9] # Results for treatment A

group_b = [2.0, 2.1, 2.6, 2.8, 2.4] # Results for treatment B

# Perform t-test

t_stat, p_val = ttest_ind(group_a, group_b)

print(f"T-statistic: {t_stat}, P-value: {p_val}")

Output

T-statistic: -1.6285130624347315, P-value: 0.14206565386214137

What the Code Does:

- Data Input:

group_aandgroup_bcontain measurements from two independent groups (e.g., yields from two catalysts).

- T-Test Execution:

- The

ttest_ind()function performs an independent two-sample t-test to compare the means of the two groups.

- The

- Output:

- T-Statistic: Quantifies the difference in means relative to data variability.

- P-Value: Indicates whether the observed difference is statistically significant.

Interpretation:

- The t-statistic is -1.63, indicating that the mean of

group_ais slightly lower than the mean ofgroup_b. - The p-value is 0.14, which is greater than 0.05. This means we fail to reject the null hypothesis and conclude that there is no statistically significant difference between the two groups.

Applications in Chemistry:

- Catalyst Comparison:

- Determine if two catalysts produce significantly different yields or reaction rates.

- Material Testing:

- Evaluate whether a new material significantly improves a property (e.g., tensile strength, thermal stability) compared to a standard material.

- Experimental Conditions:

- Test whether changes in temperature, pressure, or solvent lead to meaningful differences in reaction outcomes.

Important Considerations:

- Ensure the data meets the assumptions of a t-test:

- Independence of groups.

- Approximately normal distribution.

- Similar variances (use Welch’s t-test if variances differ).

- For multiple group comparisons, consider using ANOVA instead of a t-test.

By using hypothesis testing, chemists can make statistically supported decisions about experimental results and conditions.

Practice Problem 3:

In the BBBP dataset, compare the mean num values between permeable (p_np=1) and non-permeable (p_np=0) compounds using a t-test.

▶ Show Solution Code

from scipy.stats import ttest_ind

import pandas as pd

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Separate data by permeability

permeable = df[df['p_np'] == 1]['num']

non_permeable = df[df['p_np'] == 0]['num']

# Perform t-test

t_stat, p_val = ttest_ind(permeable, non_permeable)

print(f"T-statistic: {t_stat}, P-value: {p_val}")

Output

Correlation and Regression

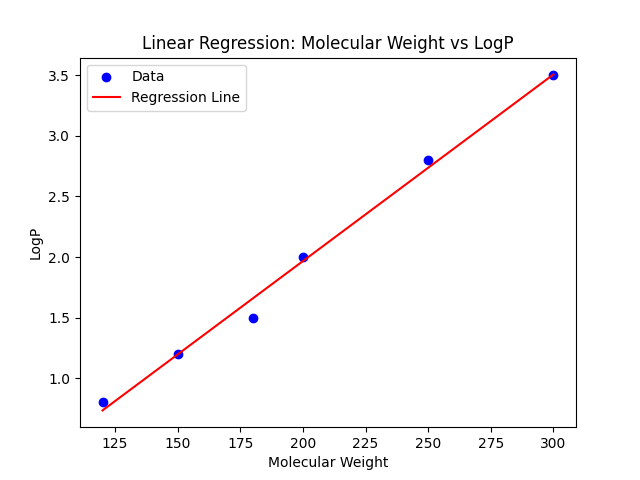

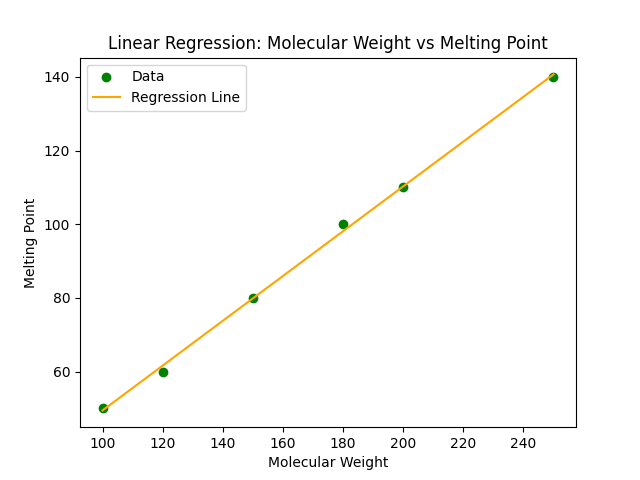

Explanation:

Correlation and regression are statistical tools used to analyze relationships between variables. These methods are crucial for chemists to understand how different molecular properties are related and to make predictions based on data.

Correlation:

- Definition: Correlation quantifies the strength and direction of a linear relationship between two variables.

- Range: The correlation coefficient (r) ranges from -1 to 1:

- r = 1: Perfect positive correlation (as one variable increases, the other also increases proportionally).

- r = -1: Perfect negative correlation (as one variable increases, the other decreases proportionally).

- r = 0: No correlation.

- Use in Chemistry: For example, correlation can reveal whether molecular weight is related to boiling point in a set of compounds.

Regression:

- Definition: Regression predicts the value of a dependent variable based on one or more independent variables.

- Types: Simple linear regression (one independent variable) and multiple linear regression (two or more independent variables).

- Output:

- Regression Coefficient (β): Indicates the magnitude and direction of the relationship between the independent variable and the dependent variable.

- Intercept (α): Represents the predicted value of the dependent variable when the independent variable is zero.

- Use in Chemistry: Regression can predict molecular properties, such as boiling point, based on easily measurable features like molecular weight.

Example Code for Correlation and Linear Regression:

import pandas as pd

import seaborn as sns

from scipy.stats import pearsonr

from sklearn.linear_model import LinearRegression

# Example data

df = pd.DataFrame({'MolecularWeight': [180, 200, 150, 170, 210],

'BoilingPoint': [100, 110, 95, 105, 120]})

# Calculate correlation

corr, _ = pearsonr(df['MolecularWeight'], df['BoilingPoint'])

print(f"Correlation: {corr}")

# Linear regression

X = df[['MolecularWeight']] # Independent variable

y = df['BoilingPoint'] # Dependent variable

model = LinearRegression().fit(X, y)

# Regression results

print(f"Regression coefficient: {model.coef_[0]}")

print(f"Intercept: {model.intercept_}")

Output

Correlation: 0.9145574682496187

Regression coefficient: 0.36842105263157904

Intercept: 38.947368421052616

What the Code Does:

- Input Data:

- The DataFrame contains molecular weight and boiling point values for five molecules.

- Correlation:

- The

pearsonrfunction calculates the Pearson correlation coefficient (r) between molecular weight and boiling point. - Example output: If r = 0.95, it indicates a strong positive linear relationship.

- The

- Regression:

- The

LinearRegressionclass models the relationship between molecular weight (independent variable) and boiling point (dependent variable). - Key Outputs:

- Regression Coefficient: Shows how much the boiling point changes for a one-unit increase in molecular weight.

- Intercept: Indicates the boiling point when the molecular weight is zero.

- The

Interpretation:

- If the correlation coefficient is high (close to 1 or -1), it suggests a strong linear relationship.

- The regression coefficient quantifies the strength of this relationship, and the intercept gives the baseline prediction.

Applications in Chemistry:

- Molecular Property Prediction:

- Predict boiling points of new compounds based on molecular weight or other properties.

- Quantitative Structure-Property Relationships (QSPR):

- Use regression to model how structural features influence chemical properties like solubility or reactivity.

- Experimental Design:

- Understand relationships between variables to guide targeted experiments.

Practice Problem 4:

Calculate the correlation between num and p_np in the BBBP dataset. Then, perform a linear regression to predict num based on p_np.

▶ Show Solution Code

import pandas as pd

from scipy.stats import pearsonr

from sklearn.linear_model import LinearRegression

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Calculate correlation

corr, _ = pearsonr(df['num'], df['p_np'])

print(f"Correlation between num and p_np: {corr}")

# Linear regression

X = df[['p_np']]

y = df['num']

model = LinearRegression().fit(X, y)

print(f"Regression coefficient: {model.coef_[0]}")

print(f"Intercept: {model.intercept_}")

Output

Correlation between num and p_np: 0.43004111834348224

Regression coefficient: 600.5995724446169

Intercept: 568.2836438923343

ANOVA (Analysis of Variance)

Explanation:

ANOVA (Analysis of Variance) is a statistical method used to determine if there are significant differences between the means of three or more independent groups. It helps chemists evaluate whether variations in a continuous variable (e.g., melting point or reaction yield) are influenced by a categorical variable (e.g., types of catalysts or reaction conditions).

Key Concepts:

- Groups and Variability:

- ANOVA compares the variability within each group to the variability between groups.

- If the variability between groups is significantly larger than the variability within groups, it suggests that the group means are different.

- Hypotheses:

- Null Hypothesis (H₀): All group means are equal.

- Alternative Hypothesis (Hₐ): At least one group mean is different.

- F-Statistic:

- The F-statistic is calculated as the ratio of between-group variability to within-group variability.

- A larger F-statistic indicates a greater likelihood of differences between group means.

- P-Value:

- The p-value indicates the probability of observing the F-statistic if H₀ is true.

- A small p-value (typically < 0.05) leads to rejecting H₀, suggesting that group means are significantly different.

Why It’s Useful for Chemists:

- ANOVA can identify whether different conditions (e.g., catalysts, solvents, or temperatures) significantly affect a property of interest, such as yield, rate, or stability.

Example Code for ANOVA:

from scipy.stats import f_oneway

# Example data for three groups

group1 = [1.1, 2.2, 3.1, 2.5, 2.9] # Data for condition 1

group2 = [2.0, 2.5, 3.5, 2.8, 3.0] # Data for condition 2

group3 = [3.1, 3.5, 2.9, 3.6, 3.3] # Data for condition 3

# Perform ANOVA

f_stat, p_val = f_oneway(group1, group2, group3)

print(f"F-statistic: {f_stat}, P-value: {p_val}")

Output:

F-statistic: 3.151036525172754, P-value: 0.07944851235243751

What the Code Does:

- Data Input:

- Three groups of data represent different experimental conditions (e.g., three catalysts tested for their effect on reaction yield).

- ANOVA Test:

- The

f_oneway()function performs a one-way ANOVA test to determine if there are significant differences between the group means.

- The

- Results:

- F-Statistic: Measures the ratio of between-group variability to within-group variability.

- P-Value: If this is below a threshold (e.g., 0.05), it suggests that the differences in means are statistically significant.

Interpretation:

- The p-value (0.08) is greater than 0.05, so we cannot reject the null hypothesis.

- This indicates that it isn’t statistically significant from the others.

Applications in Chemistry:

- Catalyst Screening:

- Compare reaction yields across multiple catalysts to identify the most effective one.

- Reaction Optimization:

- Evaluate the effect of different temperatures, solvents, or reaction times on product yield or purity.

- Material Properties:

- Analyze differences in tensile strength or thermal stability across materials produced under different conditions.

- Statistical Quality Control:

- Assess variability in product quality across batches.

By using ANOVA, chemists can draw statistically sound conclusions about the effects of categorical variables on continuous properties, guiding decision-making in experimental designs.

Practice Problem 5:

Group the num column in the BBBP dataset by the first digit of num (e.g., 1XX, 2XX, 3XX) and perform an ANOVA test to see if the mean values differ significantly among these groups.

▶ Show Solution Code

from scipy.stats import f_oneway

import pandas as pd

# Load the BBBP dataset

url = 'https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/BBBP.csv'

df = pd.read_csv(url)

# Group 'num' by the first digit

group1 = df[df['num'].between(100, 199)]['num']

group2 = df[df['num'].between(200, 299)]['num']

group3 = df[df['num'].between(300, 399)]['num']

# Perform ANOVA

f_stat, p_val = f_oneway(group1, group2, group3)

print(f"F-statistic: {f_stat}, P-value: {p_val}")

Output

Section 2.2 – Quiz Questions

1) Factual Questions

Question 1

You’re analyzing a large toxicity dataset with over 50 different biological and chemical metrics (columns) for each compound. To summarize complex information, such as average assay scores grouped by molecular weight range or chemical class, which of the following functions would be most useful?

A. Merge the dataset

B. Normalize the dataset

C. Remove duplicates

D. Create a pivot table to reshape the dataset

▶ Click to show answer

Correct Answer: D▶ Click to show explanation

Explanation: Pivot tables help chemists aggregate results (e.g., toxicity scores by chemical class or molecular descriptor bins), making it easier to spot trends, compare subgroups, and prepare the data for downstream modeling or visualization.Question 2

You’re training a machine learning model to predict compound toxicity. Your dataset includes a categorical feature called “TargetClass” that describes the biological target type (e.g., enzyme, receptor, transporter). Why is encoding this categorical column necessary before model training?

A. It removes unnecessary data from the dataset.

B. Machine learning models require numerical inputs to process categorical data effectively.

C. Encoding increases the number of categorical variables in a dataset.

D. It automatically improves model accuracy without additional preprocessing.

▶ Click to show answer

Correct Answer: B▶ Click to show explanation

Explanation: Encoding converts non-numeric data (e.g., categories) into numerical values so that machine learning models can process them. Common methods include one-hot encoding and label encoding. Most machine learning algorithms can't handle raw text or labels as inputs. Encoding (e.g., one-hot or label encoding) translates categories into numeric form, allowing the model to interpret class differences and make predictions based on them — a common step when working with descriptors like compound type, target family, or assay outcome.Question 3

You’re working with a dataset containing results from multiple bioassays for various compounds. Each row contains a compound ID, assay name, and response value. You want to summarize this dataset so that each compound has one row, and the assay names become columns.

A. Pivot tables are used to remove missing values from a dataset.

B. The pivot_table() function is used to summarize and reorganize data by converting rows into columns.

C. The melt() function is used to create a summary table by aggregating numerical data.

D. A pivot table can only be created using categorical data as values.

▶ Click to show answer

Correct Answer: B▶ Click to show explanation

Explanation: In cheminformatics, pivot_table() is especially useful for converting assay results from long to wide format, where each assay becomes a separate column. This transformation is common before merging descriptor data or building a machine learning dataset.Question 4

You are preparing spectral data from a library of compounds for training a new ML algorithm and you decide to use Min-Max normalization. What is the primary reason for doing this?

A. To standardize solvent types across experiments

B. To rescale numerical values into a common range (e.g., 0 to 1)

C. To eliminate duplicate solubility measurements

D. To convert string units into numerical ones

▶ Click to show answer

Correct Answer: B▶ Click to show explanation

Explanation: Min-Max normalization rescales values to a fixed range (often 0 to 1), making it easier to compare results from multiple spectra for different compounds in different concentrations.Question 5

You are working with a dataset of reaction yields, but the yield values are stored as strings (e.g., '85', '90'). You need to compute averages for reporting. What function should you use?

A. df.rename()

B. df.agg()

C. df.astype()

D. df.to_csv()

▶ Click to show answer

Correct Answer: C▶ Click to show explanation

Explanation: `astype()` is used to convert a column's data type, such as from string to float or integer, so mathematical operations can be performed.2) Comprehension / Application Questions

Question 6

You have just received a dataset regarding the toxicity of commonly used compounds (TOX21) and would like to get an idea of the metrics in the dataset.

Task: Read the TOX21.csv dataset into a DataFrame and print the first five rows. Which of the following matches your third compound in the output?

A. Diethyl oxalate

B. Dimethyl dihydrogen diphosphate

C. Dimethylglyoxime

D. 4’-Methoxyacetophenone

▶ Click to show answer

Correct Answer: C▶ Click to show explanation

Explanation: The third row of the dataset contains Dimethylglyoxime, based on the output of `df.head()`.▶ Show Solution Code

import pandas as pd

url = "https://raw.githubusercontent.com/Data-Chemist-Handbook/Data-Chemist-Handbook.github.io/refs/heads/master/_pages/Chemical%20List%20tox21sl-2025-02-20.csv"

df = pd.read_csv(url)

print(df.head())

Question 7

After looking at the TOX21 dataset, you realize that there are missing values in rows and duplicate rows.

To fix this problem, you should handle the missing values by using ___ and get rid of duplicate rows by ___.

A. df.fillna('unknown', inplace=True), df.drop_duplicates()

B. df.fillna(0, inplace=False), df.drop_duplicates(inplace=True)

C. df.dropna(inplace=True), df.drop_duplicates()

D. df.fillna('missing', inplace=True), df.drop_duplicates(inplace=True)

▶ Click to show answer

Correct Answer: A▶ Click to show explanation

Explanation: `fillna('unknown')` fills missing values with a placeholder, maintaining the dataset's size. `drop_duplicates()` removes any repeated rows to ensure clean data.Question 8

Which function and code would allow you to create a new column that represents the average of the boiling points 'bp' in your dataset?

A. Grouping; df['avg_bp'] = df['bp'].mean()

B. Grouping; df['avg_bp'] = df['bp'].sum() / len(df)

C. Merging; df['avg_bp'] = df['bp'].apply(lambda x: sum(x) / len(x))

D. Merging; df['avg_bp'] = df.apply(lambda row: row['bp'].mean(), axis=1)

▶ Click to show answer

Correct Answer: A▶ Click to show explanation

Explanation: The `.mean()` method calculates the column-wise average, and assigning it to a new column applies that single value across all rows.Question 9

You want to perform an ANOVA statistical analysis to evaluate the activity of the compounds listed in the TOX21 dataset.

Task:

Determine whether the average molecular mass of compounds with ToxCast Active Ratio above 20% is statistically different from those below 20%.

- Use a 95% confidence level (α = 0.05).

- Calculate the F-statistic and P-value to assess significance.

Question:

Are these two groups statistically significant at the 95% confidence level? What are the F-statistic and P-value?

A. Yes, F-Statistic: 203.89, P-Value: 0.03

B. Yes, F-Statistic: 476.96, P-Value: 0.00

C. No, F-Statistic: 78.09, P-Value: 0.09

D. No, F-Statistic: 548.06, P-Value: 0.10

▶ Click to show answer

Correct Answer: B▶ Click to show explanation